FACIAL CODING

Frictionless Measurement of Human Attention and Expression

Realeyes’ patented technology uses front-facing cameras with computer vision to measure the nuances of human attention, reaction, and emotional facial expressions.

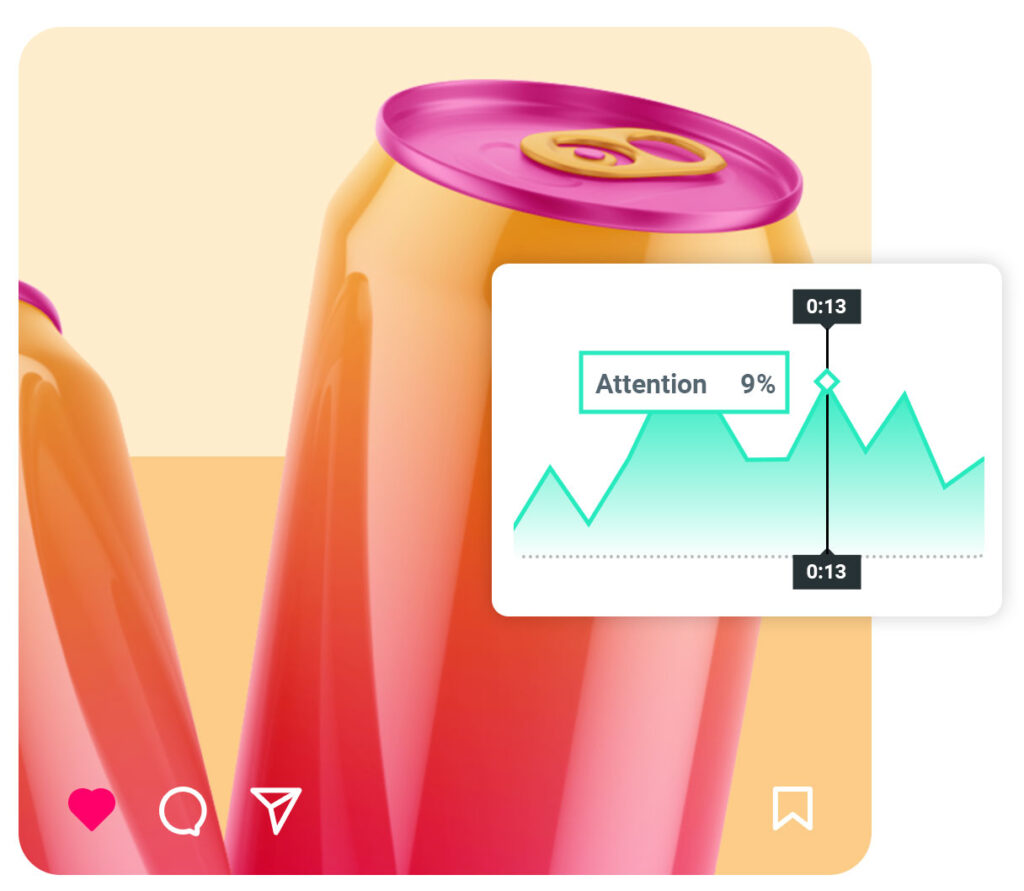

Attention Measurement

Quantifying focus or interest by tracking head pose, face direction, eyelid openness, and gaze direction in response to a stimulus.

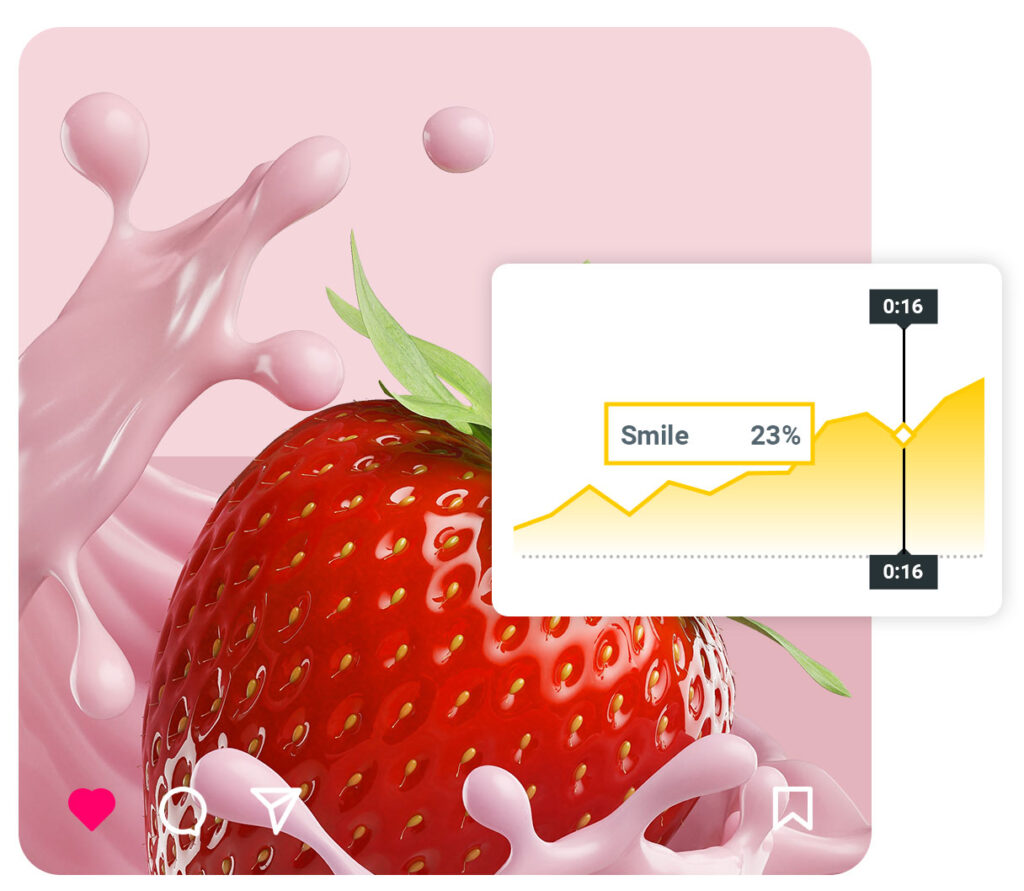

Facial Expression

The movement and configurations of facial muscles, including the movements of the eyebrows, eyes, nose, mouth and cheeks.

Human Level of Accuracy

The training data for our models comes from our proprietary dataset, collected over more than 12 years of developing facial coding models. Camera recordings are collected across different countries, age groups and sexes, to achieve fair and balanced representation globally.

Those recordings are annotated for attention and facial expressions by a large pool of qualified psychologists and annotators. Employing a “wisdom of the crowds” approach, video frames are only labelled when 3 to 7 annotators reach a majority agreement on each specific frame. As an additional step, annotated labels then go through a standardized quality assurance review.
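The majority-agreement rule described above can be illustrated with a short sketch. It assumes that "majority" means strictly more than half of the annotators on a frame, and that a frame with fewer than 3 or more than 7 votes is left unlabelled. Both are assumptions, and the function name `majority_label` is hypothetical rather than part of any Realeyes tooling.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def majority_label(
    votes: Sequence[Hashable], min_votes: int = 3, max_votes: int = 7
) -> Optional[Hashable]:
    """Return the label a strict majority of annotators agree on, else None."""
    # Assumption: frames outside the 3-to-7 annotator range are not labelled.
    if not (min_votes <= len(votes) <= max_votes):
        return None
    label, count = Counter(votes).most_common(1)[0]
    # Assumption: "majority" means more than half of the votes for this frame.
    return label if count * 2 > len(votes) else None
```

Under these assumptions, a frame annotated as `["smile", "smile", "neutral"]` is labelled `"smile"`, while a frame where three annotators each choose a different label stays unlabelled.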

People and AI recognize attention and expression in the same way: by separating the background from the foreground, focusing on the face, and detecting its countenance.

Realeyes uses a proprietary Convolutional Neural Network (CNN), a type of deep neural network specifically designed for processing and analyzing images and videos. Data is processed in the cloud in three steps.


Face Detection

AI detects the presence of a face by detecting the existence of facial features – not their identity

Face Cropping

AI isolates and extracts the facial features from each camera frame

Measurement

AI uses facial landmarks to track position and movement, from which attention and expression are measured
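The three steps above can be sketched as a small per-frame pipeline. This only illustrates the control flow and is not the Realeyes implementation: the frame representation, the `Box` type, and the `stub_detect` and `stub_measure` functions are hypothetical stand-ins for the real detection and measurement models.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Frame = List[List[int]]  # assumption: a grayscale image as rows of pixel values


@dataclass(frozen=True)
class Box:
    top: int
    left: int
    height: int
    width: int


def crop_face(frame: Frame, box: Box) -> Frame:
    """Step 2: keep only the pixels inside the detected face box."""
    rows = frame[box.top : box.top + box.height]
    return [row[box.left : box.left + box.width] for row in rows]


def process_frame(
    frame: Frame,
    detect: Callable[[Frame], Optional[Box]],
    measure: Callable[[Frame], Dict[str, float]],
) -> Optional[Dict[str, float]]:
    """Run detection, cropping, and measurement on a single frame."""
    box = detect(frame)  # Step 1: is a face present? (no identity involved)
    if box is None:
        return None  # nothing to measure on this frame
    face = crop_face(frame, box)  # Step 2
    return measure(face)  # Step 3


# Hypothetical stand-ins so the sketch can run without a trained model.
def stub_detect(frame: Frame) -> Optional[Box]:
    return Box(top=1, left=1, height=2, width=2) if any(map(any, frame)) else None


def stub_measure(face: Frame) -> Dict[str, float]:
    pixels = [p for row in face for p in row]
    return {"mean_intensity": sum(pixels) / len(pixels)}
```

Passing the detector and the measurement step in as callables keeps the pipeline independent of any particular model, which is a design choice of this sketch rather than something the text specifies.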

At Home in the Wild

We use ‘in the wild’ datasets and machine learning to teach our algorithms to cope with complexities such as poor lighting, heavy shadows, thick facial hair, spectacles, and other occlusions that would typically make attention and expression tracking more challenging.

For each video frame, the AI instantly interprets the information like a human: detecting the existence of a face, separating it from the background, focusing on facial features, and tracking the 3D head position, eye position, palpebral aperture (eyelid opening), and the shape of the expression.

Additionally, we create a person-dependent baseline, or mean face shape. Measuring expressions as deviations from this ‘neutral’ face accounts for people who naturally look more positive or negative, which reduces bias.
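A minimal sketch of the baseline idea follows. It assumes each frame's face shape is a flat list of landmark coordinates and that the baseline is the plain per-coordinate mean over one person's frames. How the baseline is actually computed is not stated, so the function names and the data layout here are illustrative assumptions.

```python
from statistics import fmean
from typing import List, Sequence

Shape = Sequence[float]  # assumption: flattened landmark coordinates for one frame


def mean_face_shape(shapes: Sequence[Shape]) -> List[float]:
    """Per-coordinate mean over one person's frames (the 'neutral' baseline)."""
    if not shapes:
        raise ValueError("at least one face shape is required")
    return [fmean(column) for column in zip(*shapes)]


def deviation_from_baseline(shape: Shape, baseline: Shape) -> List[float]:
    """Express a frame's shape relative to that person's own baseline."""
    return [s - b for s, b in zip(shape, baseline)]
```

With two frames `[1.0, 2.0]` and `[3.0, 4.0]`, the baseline is `[2.0, 3.0]`, and the second frame deviates from it by `[1.0, 1.0]`. A downstream expression model would then work on these deviations rather than on raw shapes.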

The performance of our facial coding models is systematically assessed across the following dimensions:

- Partial face occlusions

- Device types

- Head poses

- Countries

- Skin tones

- Genders

- Age groups
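One simple way to assess a model across dimensions like these is to compute a metric per subgroup and compare the best and worst groups. The sketch below uses plain accuracy and a max-minus-min gap. The choice of metric, the record format, and the function names are assumptions for illustration, not the evaluation protocol used here.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[Hashable, bool]]) -> Dict[Hashable, float]:
    """records: (group value, whether the model's prediction was correct)."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for group, is_correct in records:
        total[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / total[group] for group in total}


def largest_gap(accuracies: Dict[Hashable, float]) -> float:
    """Difference between the best and worst performing groups."""
    return max(accuracies.values()) - min(accuracies.values())
```

For example, running `accuracy_by_group` once per dimension (device type, head pose, skin tone, and so on) gives one table per dimension, and `largest_gap` summarizes how unevenly the model performs within it.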

Vital Statistics

Patents

15 pending, innovation is at the core

People Recorded

High performance global data collection

Emotion AI labels

Biggest culturally sensitive AI training set in the world

The Realeyes dataset, regarded as one of the largest authentic facial datasets in the world, includes:

• 6 million+ respondents, recorded from 93 countries

• 220k+ hours of video recordings

• GDPR compliant with user opt-ins covering Realeyes’ usage

• Age, Gender, Country, Device Attributes on all data

• 2 billion+ annotations: Skin tone, Head Pose, Occlusion, Facial Expressions, Attention, Landmarks, and Facial Attributes

• Annotated by local annotators, to account for cultural nuances

Using that dataset, our computer vision team builds AI models that match or outperform the capabilities of human annotators. These models predict attention and facial expression signals from video recordings without human intervention. To avoid biases, models are trained on carefully selected subsets of the whole annotated dataset, with balanced samples across relevant dimensions.
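Balanced sampling can be done in several ways. The sketch below shows one common option, which downsamples every group to the size of the smallest one. The selection procedure actually used is not described, so this is an illustrative assumption, and `balanced_subset` and its `key` parameter are hypothetical names.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Sequence, TypeVar

T = TypeVar("T")


def balanced_subset(
    samples: Sequence[T], key: Callable[[T], Hashable], seed: int = 0
) -> List[T]:
    """Pick the same number of samples from every group defined by `key`."""
    groups: Dict[Hashable, List[T]] = defaultdict(list)
    for sample in samples:
        groups[key(sample)].append(sample)
    if not groups:
        return []
    # Assumption: balance by downsampling every group to the smallest group size.
    per_group = min(len(members) for members in groups.values())
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    subset: List[T] = []
    for members in groups.values():
        subset.extend(rng.sample(members, per_group))
    return subset
```

To balance across several dimensions at once, `key` can return a tuple, for example `lambda s: (s["age_group"], s["skin_tone"])`, assuming each sample is a dictionary carrying those fields.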

Performance

Our AI is one of the highest performing in the world for unconstrained, real-life situations and light enough to run continuously on most mobile devices

Privacy Safe

Our models are trained on proprietary visual data that has been obtained in accordance with the highest legal, privacy and ethical standards

Fair

Our models have minimal bias across user dimensions such as skin tone, gender and age

Our facial coding technology has been validated through a blind-reviewed academic paper, and the accuracy and fairness of the models have been independently validated by two major technology and video platforms.